By Zachary Tessler, Director of Data Science

This White Paper focuses on Digital Twin technology in the maritime industry and the importance of model accuracy and validation, highlighting the barriers that exist to building accurate, useful, and reliable Digital Twins. The paper explains how Nautilus Labs uses machine learning algorithms based on naval architecture and hydrodynamic theory to create models that are interpretable and accurate even when data is less abundant. Finally, the White Paper explores Nautilus’s model accuracy rates and validation methods.

Introduction

The use of Digital Twin technology is becoming increasingly common in the maritime industry as Operators seek to improve vessel performance, reduce fuel consumption, and enhance environmental sustainability. With Digital Twins, Operators can monitor and optimize their fleet’s performance, identify potential issues before they become problems, and plan for maintenance or repairs more effectively. However, the modeling techniques that underpin this technology can vary greatly in terms of accuracy and validity, which can significantly impact the effectiveness of the resulting virtual replicas. Nautilus Labs, a leading maritime technology partner, recognizes the importance of ensuring the highest levels of model accuracy and validation in order to deliver reliable and effective solutions to shipping companies. In this White Paper, we describe Nautilus’s models, their accuracy rates, and the methods we use to validate them.

There are a few key components to Digital Twin excellence. However, they can be challenging to achieve:

- High-quality data: Digital Twins require sufficient accurate data to be effective. If data is missing, incomplete, or inaccurate, the Digital Twin may not provide an accurate representation of the physical asset, resulting in low prediction accuracy.

- Integration: Digital Twins are often implemented as standalone solutions, rather than being fully integrated with other systems and data sources. This can result in incomplete or inaccurate data, as well as a lack of interoperability with other systems. Some Digital Twin creators require users to conform to their data entry standards, which complicates existing workflows and results in resistance to adoption. Getting full business value out of a Digital Twin requires deep integration with existing systems and workflows to successfully drive change management across the user’s organization.

Machine Learning and Artificial Intelligence Built on Naval Architecture and Hydrodynamics and Powered by Human Intelligence

Our Data Science team builds machine learning algorithms that balance complexity and interpretability by leveraging the principles of naval architecture and hydrodynamic theory. Our machine learning models are structured around well-understood physical laws, unlike pure “black-box” models that operate exclusively on observational data. Black-box models excel when data is abundant and the underlying system dynamics are poorly understood, but abundant data is not always available at shipping companies. In our approach to vessel performance modeling, we use physical laws to build models that are more interpretable, maintain accuracy even outside the range of available training data, and remain accurate even when data is less abundant or unavailable (as is often the case with chartered-in vessels).

Below are some common terms in machine learning, how they are defined, and what they mean at Nautilus.

- Models: A model is a mathematical function that takes input values and produces an output value. We use machine learning and well-established physical and hydrodynamic laws to build models capable of predicting vessel resistance and performance under varying weather and loading conditions.

- Digital Twins: A Digital Twin is a digital representation of a physical ship, created by combining models with a ship’s “current state.”1 It includes detailed information about the ship’s design, construction, and operational characteristics, as well as real-time data about its present condition and performance. A Digital Twin can be used to simulate and analyze a ship’s performance in various scenarios, such as different weather conditions or cargo loads. This information can be used to optimize the ship’s operation and maintenance, and to identify potential issues before they arise.

- Multivariate Simulations: We run simulations based on our Digital Twins to predict how a given vessel will perform in a specific set of circumstances (e.g. weather, draft, speed). Multivariate simulations are mathematical models that use multiple variables or input factors to generate simulations or predictions of a system or process. These simulations can be used to study the impact of different variables on the outcome, and to make predictions about the behavior of the system under different conditions. This allows us to make recommendations that optimize for specific outcomes such as Time Charter Equivalent (TCE), Estimated Time of Arrival (ETA), or a CII grade.
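As a rough illustration of a multivariate simulation, the sketch below evaluates a model over every combination of several input ranges. The function `simulate_grid`, the stand-in model `toy_daily_fuel`, and all coefficients are hypothetical placeholders chosen for the example; they are not Nautilus's models.

```python
from itertools import product


def simulate_grid(model, **input_ranges):
    """Evaluate `model` on every combination of the supplied input values."""
    names = list(input_ranges)
    results = []
    for combo in product(*(input_ranges[name] for name in names)):
        scenario = dict(zip(names, combo))
        results.append({**scenario, "output": model(**scenario)})
    return results


def toy_daily_fuel(speed_kn, draft_m, head_wind_kn):
    """Hypothetical stand-in model: fuel rises with speed cubed, draft, and head wind."""
    return 0.004 * (1 + 0.05 * draft_m) * speed_kn ** 3 + 0.1 * head_wind_kn


# Sweep three speeds, two drafts, and two head-wind strengths (12 scenarios).
scenarios = simulate_grid(
    toy_daily_fuel,
    speed_kn=[10, 12, 14],
    draft_m=[8, 12],
    head_wind_kn=[0, 20],
)
lowest_fuel = min(scenarios, key=lambda row: row["output"])
```

In practice the quantity being optimized would be a business outcome such as TCE or ETA rather than raw fuel, but the pattern of sweeping inputs and ranking the simulated outcomes is the same.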

At Nautilus, we are constantly iterating and devoting time and effort to continuously improve our models to maximize accuracy and leverage the best developments in Data Science.

Models and Applications

Digital Twins are typically applied to two broad types of tasks, both of which depend on high-quality underlying models:

- Retrospective Analysis: Models can be used as a comparison against observational data (either historical or current), which is typically the case for performance monitoring, post-cleaning Return on Investment (ROI) assessments, hull coating or retrofit ROI assessments, and more.

- Predictive Forecasts: Models can be “future-looking,” such as where Digital Twins are used to forecast vessel performance, in conjunction with weather forecasts, for better operational and commercial decision-making.

Nautilus uses a first-principle-based modeling framework. We start with well-understood naval-architectural principles, then use historical sensor data and machine learning to specialize and tune those models to make accurate predictions for a specific ship. These predictions can be used by our Digital Twins to, among other things, estimate the real-time impact of fouling on vessel performance.

First Principles

In physics, “first-principle” modeling refers to deriving equations from the most basic physical laws possible. Some examples of these principles include:

- A ship moving at a steady speed must deliver the same amount of power through the propeller as is lost to resistance.

- The components of resistance include hull friction, form drag, wind resistance, wave-making resistance, et cetera.

- Wind resistance is a function of wind speed, wind direction, air density, ship heading, and ship geometry.

Such principles are employed by our Data Science team to build models grounded by physical laws. With these principles as a starting point, models are customized and tuned for specific ships using the available data.
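The principles listed above can be sketched in a few lines. This is a simplified illustration, not Nautilus's implementation: it uses a textbook quadratic drag law, considers only the head-on component of the apparent wind, and treats the drag coefficient, frontal area, and propulsive efficiency as placeholder values.

```python
import math


def wind_resistance_n(wind_speed_ms, wind_dir_deg, heading_deg, ship_speed_ms,
                      air_density=1.225, drag_coeff=0.8, frontal_area_m2=600.0):
    """Longitudinal wind resistance in newtons (positive opposes motion).

    wind_dir_deg is the direction the wind blows FROM. drag_coeff and
    frontal_area_m2 are illustrative placeholders for ship geometry.
    """
    # Angle of the true wind off the bow: 0 is a head wind, 180 a following wind.
    off_bow = math.radians(wind_dir_deg - heading_deg)
    # Head-on apparent wind: ship speed plus the head-on part of the true wind.
    apparent_head = ship_speed_ms + wind_speed_ms * math.cos(off_bow)
    # Quadratic drag; abs() preserves the sign so a strong tail wind pushes the ship.
    return 0.5 * air_density * drag_coeff * frontal_area_m2 * apparent_head * abs(apparent_head)


def required_power_kw(ship_speed_ms, calm_water_resistance_n, wind_resistance_newtons,
                      propulsive_efficiency=0.7):
    """Shaft power at steady speed: power lost to resistance divided by efficiency."""
    total_resistance = calm_water_resistance_n + wind_resistance_newtons
    return total_resistance * ship_speed_ms / propulsive_efficiency / 1000.0
```

Here `calm_water_resistance_n` stands in for the sum of hull friction, form drag, and wave-making resistance, each of which would have its own sub-model in a fuller treatment.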

Historical Data and Machine Learning

In addition to first principles, our team leverages historical data to estimate parameters such as the wind resistance coefficient in a statistical or machine learning framework. Building off standard naval-architectural vessel performance models, we have developed a number of model frameworks that describe how a vessel is expected to perform as dynamic factors, such as weather or loading conditions, change from day to day or voyage to voyage. These model frameworks are then tuned to match the specific performance characteristics of individual ships by utilizing machine learning methods. Typically, these methods perform best when large amounts of historical data are available. Nautilus Labs has also developed methods where, in the absence of large historical datasets for a particular vessel, we can augment the limited available data with additional historical data from similar ships, without assuming that each ship has an identical performance profile. This is described in detail in a previous White Paper.
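One simple way to picture parameter estimation with limited data is a one-parameter least-squares fit that is shrunk toward a value learned from similar ships. The sketch below is a generic statistical illustration under that assumption; the function name, the `prior` and `prior_weight` arguments, and the shrinkage scheme are hypothetical and are not the method described in the earlier White Paper.

```python
def fit_coefficient(features, targets, prior=0.0, prior_weight=0.0):
    """Estimate k in target ~ k * feature by penalized least squares.

    Minimizes sum((target - k * feature)**2) + prior_weight * (k - prior)**2.
    With prior_weight = 0 this is ordinary least squares through the origin
    (which needs at least one non-zero feature). A larger prior_weight pulls
    the estimate toward `prior`, for example a coefficient from sister ships.
    """
    sum_xy = sum(x * y for x, y in zip(features, targets))
    sum_xx = sum(x * x for x in features)
    return (sum_xy + prior_weight * prior) / (sum_xx + prior_weight)


# Plenty of vessel data: rely on the vessel's own observations.
own_estimate = fit_coefficient([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])

# Sparse vessel data: blend with a fleet-level prior instead of assuming
# the vessel is identical to its sister ships.
blended = fit_coefficient([1.0, 2.0, 3.0], [2.0, 4.0, 6.0], prior=4.0, prior_weight=14.0)
```

The blended estimate lands between the vessel's own fit and the fleet value, with the balance set by how much vessel-specific data is available relative to `prior_weight`.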

Practical Applications: Case Study on Hull Fouling Identification and Impact

Based on real-time data streams coming from a vessel, our models can be leveraged to identify when performance has dropped below a defined threshold level, alerting users to the need for a hull inspection or cleaning.

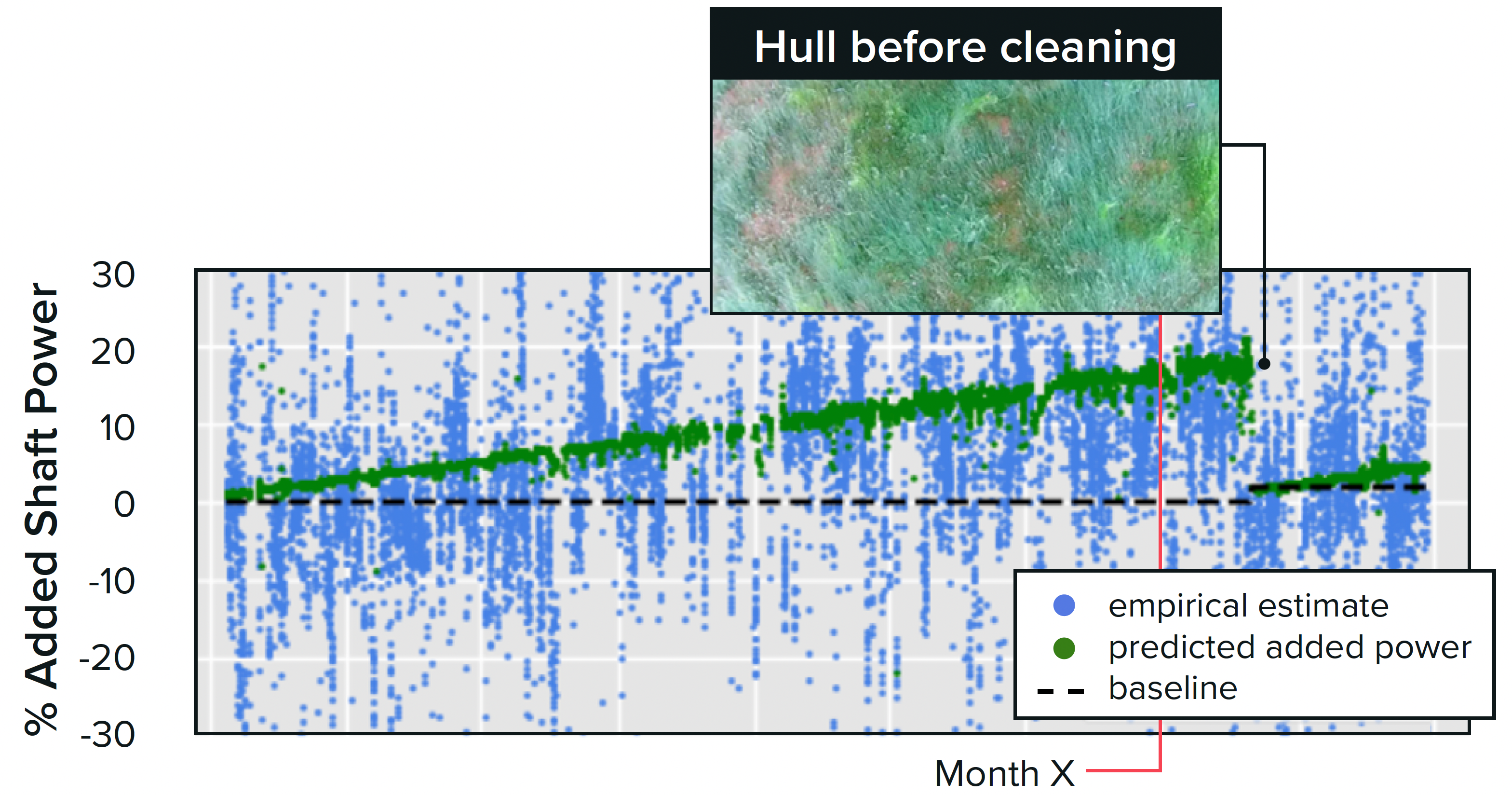

In this case study, the model for a particular vessel allowed Nautilus to demonstrate the need for a hull cleaning by highlighting the added shaft power required due to hull fouling using a “clean vessel” Digital Twin.2 In the graph below, the green line shows Nautilus’s model’s prediction of the added power due to hull fouling and the blue dots show the raw data used to compute the prediction. The dotted black line represents the baseline performance of the vessel as of the most recent hull cleaning or dry-dock.

As of Month X, Nautilus’s model estimated that hull fouling was causing the vessel to require approximately 20% more propulsive power than it would require in a clean state. When the Owner ordered an inspection of the ship, they observed the fouling pictured in the image below. After the hull was cleaned, the vessel’s performance improved significantly, as shown by the drop in % Added Shaft Power following the cleaning. Our Digital Twin also showed that while cleaning did indeed improve performance, the baseline of required shaft power was higher than the earlier baseline period due to natural performance degradation from wear and tear.

By identifying hull fouling at an early stage, the Owner was able to take swift action and clean the hull before fouling could have a large impact on the vessel’s performance, which would have resulted in thousands of extra dollars spent on fuel.
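The alerting logic in this case study can be sketched as a comparison between measured shaft power and the power a “clean vessel” Digital Twin predicts for the same conditions. The 15% threshold, the three-sample rolling window, and the function names below are illustrative assumptions, not Nautilus's production settings.

```python
def added_power_pct(measured_kw, clean_twin_kw):
    """Percent extra shaft power relative to the clean-vessel prediction."""
    return 100.0 * (measured_kw - clean_twin_kw) / clean_twin_kw


def fouling_alert(measured_kw, clean_twin_kw, threshold_pct=15.0, window=3):
    """Return the index of the first sample whose rolling-mean added power
    exceeds threshold_pct, or None if the threshold is never crossed."""
    pct = [added_power_pct(m, c) for m, c in zip(measured_kw, clean_twin_kw)]
    for i in range(window - 1, len(pct)):
        rolling_mean = sum(pct[i - window + 1:i + 1]) / window
        if rolling_mean > threshold_pct:
            return i
    return None


# Hypothetical readings: measured power drifts upward against a constant clean baseline.
measured = [1000.0, 1050.0, 1100.0, 1180.0, 1200.0, 1220.0]
clean = [1000.0] * 6
first_alert_index = fouling_alert(measured, clean)
```

Averaging over a window rather than alerting on a single reading reduces false alarms caused by sensor noise or short-lived weather effects.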

Model Validation and Transparency

No model is perfect, and understanding the strengths and limitations of a particular model is key to applying model predictions in ways that ultimately improve business outcomes. It is also critical to understand any model weaknesses so that investments in model development, data quality improvements, and new data collection can be applied most effectively.

The Nautilus Data Science team uses three key complementary approaches to validate model accuracy:

- First, we compare model predictions to historical data observations using industry-standard statistical and data science methods.

- Next, we validate model predictions for consistency with naval-architectural relationships, including how models interpolate and extrapolate predictions into areas of the domain where limited observations are available.

- Finally, we continually evaluate how models perform in practice on real voyages.

Evaluation of model performance against historical data is a common problem across industries, regardless of what data is used or what a model is predicting. The underlying idea is that a model’s predictions should be consistent with what actually happens in the real world. The historical data we have is what tells the model, and us, about how the real world operates, so our model predictions need to be consistent with our historical data.

Empirical Validation against Data Science Standards

We use the industry-standard metrics R² and RMSE to quantify the accuracy of our models based on empirical (data-driven) methods:

- R-squared (R²): R² is a statistical measure that represents the proportion of the variance for a dependent variable that is explained by an independent variable or variables in a regression model.

- Root Mean Square Error (RMSE): RMSE is the standard deviation of the residuals (prediction errors).
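Both metrics are straightforward to compute from paired observations and predictions. The sketch below uses the standard textbook definitions; the function names are chosen for this example.

```python
import math


def rmse(actual, predicted):
    """Root mean square error: square root of the mean squared residual."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - (residual sum of squares / total sum of squares).

    Undefined (division by zero) when every value in `actual` is identical.
    """
    mean_actual = sum(actual) / len(actual)
    ss_residual = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_total = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_residual / ss_total
```

RMSE is expressed in the same units as the predicted quantity (for example, kW or knots), while R² is unitless, so the two are usually reported together.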

Our team ensures that our models do not just accurately reflect the data we have available for training, but also reflect how the vessel operates in the real world, in future conditions, or past conditions that are not part of the available training data. In the worst-case scenario, a poor-quality model could simply “memorize” what happened in the historical past, with no ability to generalize to future conditions. We can avoid this, or at least detect if this is a problem, using a methodology called cross-validation, where we withhold a subset of our training data from the model training process, and use it afterwards to ensure model accuracy on “out-of-sample” data. This simulates what happens when we collect new, previously unseen, data in the future. Cross-validation is critical to ensure high-quality and accurate Digital Twins not just when training models, but also when Digital Twins are later being used in business-critical systems and workflows.
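A minimal k-fold cross-validation loop illustrates the idea of withholding data and scoring on it afterwards. The fold assignment, the cubic power-law stand-in model, and all function names are assumptions made for this sketch, not Nautilus's pipeline.

```python
import math


def k_fold_rmse(xs, ys, fit, predict, k=5):
    """Average out-of-sample RMSE over k folds.

    Fold f withholds every sample whose index i satisfies i % k == f,
    trains on the rest, and scores only the withheld samples.
    Requires at least k samples so that no fold is empty.
    """
    fold_scores = []
    for fold in range(k):
        train = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i % k != fold]
        held_out = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i % k == fold]
        params = fit([x for x, _ in train], [y for _, y in train])
        squared_errors = [(y - predict(params, x)) ** 2 for x, y in held_out]
        fold_scores.append(math.sqrt(sum(squared_errors) / len(squared_errors)))
    return sum(fold_scores) / k


def fit_cubic(speeds, powers):
    """Least-squares coefficient c for the hypothetical law power = c * speed**3."""
    numerator = sum((v ** 3) * p for v, p in zip(speeds, powers))
    denominator = sum((v ** 3) ** 2 for v in speeds)
    return numerator / denominator


def predict_cubic(coeff, speed):
    return coeff * speed ** 3
```

For time-ordered sensor data, withholding contiguous blocks of time (rather than interleaved samples as above) is often a stricter test, because neighboring samples tend to be highly correlated.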

Consistency with Naval-Architectural Theory

In addition to comparing historical observational data to model predictions on a point-by-point basis, we can also investigate hypothetical situations. By comparing how model predictions change as potential weather or loading conditions change, we can confirm that model predictions change in ways that are consistent with naval-architectural theory. As a simple example, when a vessel encounters a stronger head-wind, we expect that the vessel’s Speed Through Water (STW) would decrease, given that other operational parameters such as Shaft Speed (RPM) remain constant.

In addition to investigating how model predictions, such as STW, change as individual inputs (such as draft) evolve, we also look at more complex relationships between multiple model parameters. For example, while increasing draft alone is expected to increase vessel fuel consumption, this effect should be larger at higher speeds. These physically-grounded model validation checks are even more important in regions of the modeling domain where data is less abundant. Where data is limited, a model needs to interpolate between, or even extrapolate from, the available data. A strong naval-architectural foundation ensures that Nautilus’s models are accurate through the full range of possible weather and loading conditions, even those for which we have limited historical analogs.
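Checks like these can be automated by sweeping one input while holding the others fixed. The sketch below tests the two examples from the text: STW should not increase as head wind strengthens at constant RPM, and the fuel penalty of a deeper draft should grow with speed. The helper names and the toy models are hypothetical stand-ins for a trained model.

```python
def is_monotonic_decreasing(model, base_inputs, name, values):
    """True if the model output never increases as input `name` sweeps through `values`."""
    outputs = [model(**{**base_inputs, name: value}) for value in values]
    return all(later <= earlier for earlier, later in zip(outputs, outputs[1:]))


def draft_effect_grows_with_speed(fuel_model, speeds, draft_lo, draft_hi):
    """True if deeper draft always costs fuel and the penalty does not shrink with speed.

    `speeds` must be given in ascending order.
    """
    penalties = [
        fuel_model(speed=s, draft=draft_hi) - fuel_model(speed=s, draft=draft_lo)
        for s in speeds
    ]
    always_positive = all(p > 0 for p in penalties)
    non_shrinking = all(later >= earlier for earlier, later in zip(penalties, penalties[1:]))
    return always_positive and non_shrinking


def toy_stw(rpm, head_wind):
    """Hypothetical model: speed through water falls linearly with head wind."""
    return 0.2 * rpm - 0.05 * head_wind


def toy_fuel(speed, draft):
    """Hypothetical model: fuel scales with speed cubed and increases with draft."""
    return 0.004 * (1 + 0.05 * draft) * speed ** 3
```

A model that fails such a check in a sparsely observed region is a candidate for retraining or for tighter physical constraints, even if its R² and RMSE on the training data look acceptable.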

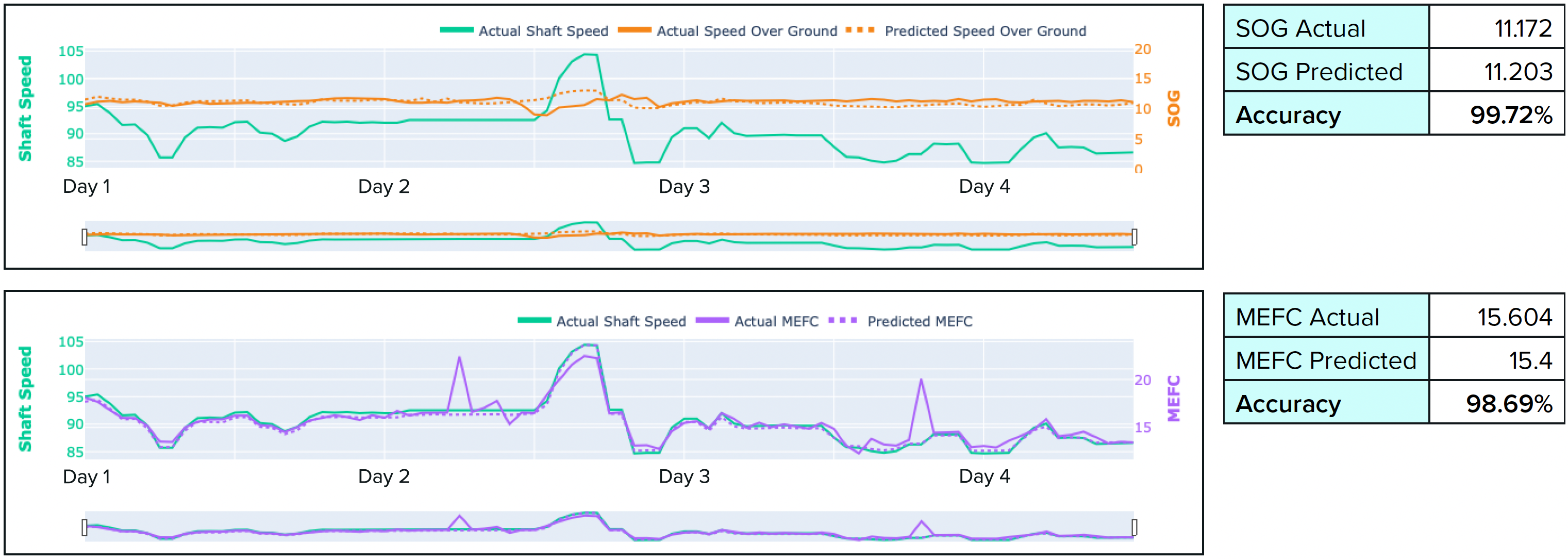

Rolling Hindcast Accuracy Assessment

After validating accuracy on observed historical data, as well as confirming consistency with physical expectations, we also validate model accuracy on live voyages and voyage optimizations. Broadly speaking, there are three categories of variables that can influence the accuracy of voyage optimization predictions: 1) weather forecast accuracy, 2) shaft speed and routing changes, and 3) Digital Twin model quality. With Nautilus’s Rolling Hindcast Accuracy Assessment, we can control for the impact of the first two categories of variables and isolate the predictive accuracy of Digital Twin models on an hourly basis. This methodology compares our simulations as of the “zero hour weather forecast”3 against the actual results that materialize.

- We simulate every hour of the voyage segment using the “zero hour weather forecast” and the actual shaft speed corresponding to each hour as data inputs.

- With those inputs, we simulate the predicted Speed Over Ground (SOG) and Main Engine Fuel Consumption (MEFC) for each hour.

- We then compare our predictions against the actual SOG and MEFC for each hour to determine how accurate our predictions were. Because weather forecast accuracy and shaft speed and routing changes have been controlled for, the remaining error is attributable to the Digital Twin models themselves.

Our benchmark for prediction accuracy is between 98% and 99% on a voyage-aggregate basis for vessels with high-frequency sensor data. In the scenario depicted in the graphs below, we achieved 99.72% prediction accuracy for SOG and 98.69% prediction accuracy for MEFC on a vessel with high-frequency sensor data.
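The hindcast procedure can be sketched as a loop over hourly records. The exact accuracy formula is not stated in this paper, so the sketch assumes one plausible definition: 100% minus the absolute percentage error of the voyage totals. The record keys, the `twin(weather, rpm)` call signature, and the function names are likewise hypothetical.

```python
def voyage_aggregate_accuracy_pct(predicted, actual):
    """Assumed metric: 100% minus the absolute percentage error of the voyage totals."""
    total_actual = sum(actual)
    return 100.0 * (1.0 - abs(sum(predicted) - total_actual) / total_actual)


def rolling_hindcast(twin, hours):
    """Score a Digital Twin over one voyage segment.

    Each record in `hours` is a dict with the keys zero_hour_weather,
    shaft_rpm, actual_sog, and actual_mefc. `twin(weather, rpm)` must
    return a (sog, mefc) pair for that hour.
    """
    predicted_sog, predicted_mefc = [], []
    for hour in hours:
        # Use the zero-hour forecast and the actual shaft speed so that forecast
        # error and operational changes do not contaminate the comparison.
        sog, mefc = twin(hour["zero_hour_weather"], hour["shaft_rpm"])
        predicted_sog.append(sog)
        predicted_mefc.append(mefc)
    return {
        "sog": voyage_aggregate_accuracy_pct(predicted_sog, [h["actual_sog"] for h in hours]),
        "mefc": voyage_aggregate_accuracy_pct(predicted_mefc, [h["actual_mefc"] for h in hours]),
    }
```

Because hourly over- and under-predictions can cancel in a voyage total, an aggregate figure of this kind is best read alongside hourly error metrics such as RMSE.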

Conclusion

By leveraging empirical data and the laws of physics, we have created highly-accurate vessel-specific models that allow our clients to predict the outcomes of a voyage ahead of time and make operating decisions to optimize for their commercial and environmental goals. We believe in transparency and building trust in our Data Science excellence with our clients and end-users to support organizational change management.

The implications of Nautilus’s Digital Twin technology for the maritime industry are significant, enabling shipping companies to improve vessel performance, reduce fuel consumption, and enhance environmental sustainability, all while minimizing costs and improving commercial bottom lines. Our technology provides the most accurate vessel models and the most rigorous validation methods in the industry, empowering Operators to make data-driven decisions that optimize performance, reduce costs, and enhance environmental responsibility. By leveraging the power of our Digital Twin technology, shipping companies can accelerate decarbonization efforts, meeting the International Maritime Organization’s targets to reduce the carbon intensity of international shipping by at least 40% by 2030, pursuing efforts toward 70% by 2050, relative to 2008 levels,4 while also achieving greater operational efficiency and profitability. Nautilus Labs is committed to supporting the maritime industry in achieving these goals, and our technology is a critical tool to help shipping companies reduce their environmental impact and enhance commercial competitiveness simultaneously.

1 A ship’s “current state” refers to its status as of any given moment. This is impacted by a number of variables such as geographic position, speed, hull fouling, vessel condition, weather, and more.

2 A Digital Twin of a clean vessel provides a baseline for vessel performance unimpacted by fouling. This allows us to calculate the impact of fouling on vessel performance.

3 Weather forecasts change over time because they tend to become more accurate the closer the forecast is issued to the forecasted period; i.e., a weather forecast for one hour into the future is more accurate than a weather forecast for one day into the future. The “zero hour weather forecast” refers to the weather forecast for a particular hour as of the start of that hour.

4 “Initial IMO GHG Strategy,” International Maritime Organization, accessed May 4, 2023, www.imo.org/en/MediaCentre/HotTopics/Pages/Reducing-greenhouse-gas-emissions-from-ships.aspx.