By Zachary Tessler, Director of Data Science


Executive Summary

The shipping industry is facing enormous pressure to decarbonize and must do so while preserving bottom lines. While noon reports have been an industry standard for centuries, new data collection methods such as high-frequency sensors have given owners and operators unprecedented amounts of data. This study evaluated data science metrics that measure the accuracy of simulations built with three types of models: i) models based on noon reports only; ii) models based on high-frequency sensor data; and iii) models based on a combination of a vessel’s noon reports enriched with high-frequency sensor data from similar vessels in the Nautilus data pool. The study found that models built on high-frequency sensor data yield the most accurate simulations, but where high-frequency data is not available, results from noon-only models can be greatly improved by enrichment with similar vessels’ sensor data.

Ocean shipping is the behemoth of global trade, accounting for 90 percent of all goods transported worldwide. Many current industry practices were inherited from the industry’s long history. Information was traditionally siloed between stakeholders, with no streamlined approach to enable collaboration and better decision making. These operational inefficiencies have contributed to shipping becoming responsible for 3 percent of total greenhouse gas emissions today, and if left unchecked, the industry is projected to be responsible for 17 percent of global carbon dioxide (CO2) emissions by 2050.¹

For the shipping industry to remain viable, decarbonization is non-negotiable.

The International Maritime Organization (IMO) has set a target to reduce the carbon intensity of international shipping (CO2 emissions per transport work) by at least 40 percent by 2030, pursuing efforts toward 70 percent by 2050, both relative to 2008 levels.² One measure to accomplish this is the Carbon Intensity Indicator (CII), a rating system that will grade a ship’s carbon efficiency, effective January 2023. As the climate changes, consumers are also becoming more discerning about the carbon footprints of the products they buy. From top-down government regulations to bottom-up market pressures, the heat is on to decarbonize the supply chain. Shipping companies play an integral role in achieving decarbonization goals.

A market-ready solution built around your existing infrastructure to maximize commercial returns while minimizing carbon emissions.

Machine learning and artificial intelligence enable vessel owners and operators to keep up with the pace of change required to comply with regulations and Charter Party agreements while remaining profitable and achieving fleet efficiency. The ‘engine’ that powers these technologies is the algorithmic model. Maximizing fleet efficiency requires a solution that scales fleet-wide: voyage optimization at the voyage level, performance management at the vessel level, and simulations at the fleet level. With the right quality and quantity of data, highly effective models can be built for all these tasks.

Powered by billions of data points, Nautilus leverages machine learning and naval architectural principles to generate ship-specific performance models. These models, or digital twins, allow owners and operators to understand true vessel performance and can predict a ship’s behavior in any given environment. Digital twins are the baseline for performance analytics and for Nautilus’s Voyage Optimization recommendations, which also take additional third-party data into account.

Nautilus makes data-driven decarbonization and profit optimization a reality for all owners and operators.

Nautilus’s approach to optimized fleet efficiency leverages the combined power of human and machine intelligence to make vessels and voyages as efficient as they can be regardless of the hardware or software with which a vessel is equipped. Our approach to data modeling, the backbone of our insights and recommendations, incorporates both noon report data and high-frequency sensor data (HFD) to generate the industry’s most accurate digital twins. The two types of data provide different benefits:

Noon report data has its limitations,³ and high-frequency data provides the most reliable source of truth, but many vessels are not yet equipped with high-frequency sensors. To deliver real efficiency gains, today’s solutions have to work on a fleet-wide scale, regardless of data availability.

At Nautilus, we fit our solutions into our clients’ existing infrastructures and deliver Voyage Optimization around their business needs. Regardless of the data source, whether noon reports or high-frequency sensors, we can make the available data work harder by augmenting it with data from the Nautilus data pool. This approach allows us to combine the best of both worlds: ship-specific data from individual vessels and macro-level data from the wider fleet.

With data, the whole is greater than the sum of its parts. Nautilus contextualizes, verifies, and augments your noon data with billions of supporting data points from other vessels.

Just as a ship can only perform as well as its engines allow, predictive machine learning models can only forecast as accurately as the quality of the underlying dataset allows. By taking ship-specific data (Vessel X) and enriching it with data from comparable ships (Vessels Y and Z), we can make improved predictions about the original ship in areas where Vessel X lacks data. While no two vessels are exactly alike, ocean-going ships are bound by the same physics and design constraints. This means that if we identify vessels that share similar characteristics with Vessel X, such as Vessels Y and Z, and have high-quality data from those two vessels, algorithms can make reliable predictions about Vessel X by cross-referencing the performance of Vessels Y and Z.

Through the richness of the Nautilus sensor and noon database, models can be trained to identify patterns and anticipate future outcomes for a breadth of weather or operating conditions. When accompanied by in-depth ship-specific data (whether from noon or sensors), the model can then be tailored to accurately predict the performance of that particular ship.
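As an illustration of the idea of identifying comparable ships, the sketch below ranks pool vessels by their distance to a target vessel in a small, range-scaled feature space. It is a minimal example and not a description of how Nautilus selects similar vessels: the `Vessel` class, the three characteristics used (length, beam, deadweight), the `similar_vessels` helper, and all numbers are hypothetical.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass(frozen=True)
class Vessel:
    # All fields are hypothetical illustration features, not the
    # characteristics actually used to match vessels in the data pool.
    name: str
    length_m: float      # length overall, metres
    beam_m: float        # beam, metres
    deadweight_t: float  # deadweight, tonnes


def _features(v):
    return (v.length_m, v.beam_m, v.deadweight_t)


def similar_vessels(target, pool, k=2):
    """Return the k pool vessels closest to the target vessel."""
    candidates = [v for v in pool if v.name != target.name]
    everyone = candidates + [target]
    # Scale each feature by its observed range so that no single unit
    # (for example tonnes versus metres) dominates the distance.
    columns = list(zip(*(_features(v) for v in everyone)))
    ranges = [(max(c) - min(c)) or 1.0 for c in columns]

    def distance(v):
        pairs = zip(_features(v), _features(target), ranges)
        return sqrt(sum(((a - b) / r) ** 2 for a, b, r in pairs))

    return sorted(candidates, key=distance)[:k]


target = Vessel("Vessel X", 229.0, 32.3, 82_000)
pool = [
    Vessel("Vessel Y", 228.0, 32.2, 81_500),
    Vessel("Vessel Z", 230.0, 32.3, 83_000),
    Vessel("Vessel W", 333.0, 60.0, 300_000),  # a much larger ship
]
neighbours = similar_vessels(target, pool, k=2)
print([v.name for v in neighbours])
```

In a pooled approach of this kind, sensor records from the selected neighbours would then be combined with the target vessel’s own noon reports to train its model; how the two sources are weighted is a design choice this sketch does not cover.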

Case Study

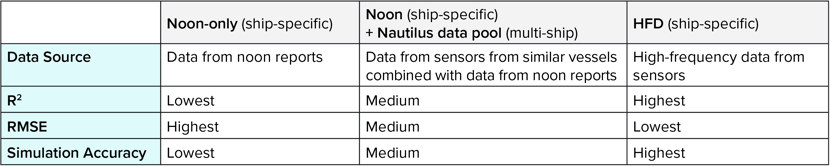

Nautilus undertook a study of 18 vessels to validate and compare the accuracy of the modeling approaches:

- “Noon + Nautilus data pool”, a multi-ship approach where data from a ship’s noon reports was augmented with data from the Nautilus data pool from similar ships’ sensors,

- “HFD”, where data from the ship’s high-frequency sensors was used, and

- “Noon-only”, where data from the ship’s noon reports was used.

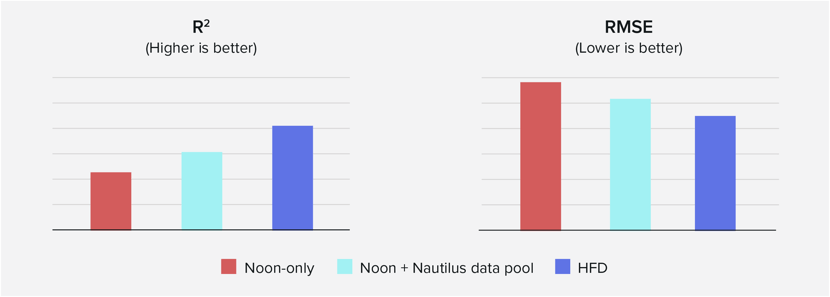

The team used the industry-standard data science metrics R² and RMSE to compare the results of the three approaches and made the following findings:

- “HFD”, which exclusively utilized sensor data from the target ship, reliably yielded the most accurate predictions.

- For every vessel, “Noon + Nautilus data pool” improved on the accuracy of “Noon-only” by a significant margin:

  - “Noon + Nautilus data pool” provided 62% of the benefits of “HFD”, without the increased ship-specific sensor data requirements (RMSE).⁴

  - “Noon + Nautilus data pool” improved the predictive power over “Noon-only” by 33% (R²).⁵
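For readers less familiar with the two metrics, the sketch below computes RMSE and R-squared from their standard definitions. It also shows one plausible way to read a “share of the HFD benefit” figure: the fraction of the RMSE gap between “Noon-only” and “HFD” that the pooled model closes. That reading is our assumption, since the exact formula is not stated here, and the function `share_of_hfd_benefit` and every number below are hypothetical rather than study data.

```python
from math import sqrt


def rmse(actual, predicted):
    """Root mean square error of the predictions."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return sqrt(sum(squared) / len(squared))


def r_squared(actual, predicted):
    """Proportion of the variance in `actual` explained by the predictions."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


def share_of_hfd_benefit(rmse_noon, rmse_pooled, rmse_hfd):
    """Assumed reading: fraction of the Noon-only to HFD error gap closed."""
    return (rmse_noon - rmse_pooled) / (rmse_noon - rmse_hfd)


# Hypothetical daily fuel consumption in tonnes (not study data).
actual = [20.0, 24.0, 28.0, 32.0]
predicted = [21.0, 23.0, 29.0, 31.0]

print(rmse(actual, predicted))       # 1.0
print(r_squared(actual, predicted))  # 0.95

# Hypothetical RMSE values for the three approaches.
share = share_of_hfd_benefit(rmse_noon=2.0, rmse_pooled=1.38, rmse_hfd=1.0)
print(round(share, 2))               # 0.62
```

Lower RMSE and higher R-squared both indicate a better fit, which is why an improvement is reported as a reduction in one and an increase in the other.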

These findings support the conclusion that vessels that have no sensors and rely on noon reports, and are thus ineligible for the “HFD” approach, receive more accurate predictions from the “Noon + Nautilus data pool” approach than from “Noon-only” data. Ship models vary in accuracy to some degree because of differences in data quality and quantity, but regardless of how well a ship’s “Noon-only” model performs, we find substantial improvements in model performance with the “Noon + Nautilus data pool” method.

Better data means voyages are optimized for better commercial returns and better CII.

Where the quality or quantity of data is limited, this augmented modeling approach produces high-accuracy, vessel-specific performance models built by enriching data from each ship with data from wider datasets.

In this study of 18 vessels, the inclusion of high-frequency data was shown to produce the most accurate and reliable models. Where an individual vessel has no sensor data available, the most effective models can be produced by combining the vessel’s noon data with high-frequency data from a wider database such as the Nautilus Platform.

For ship owners and charterers, the value of high-quality performance models comes down to well-informed decisions that result in fuel savings, lower carbon emissions, and improved voyage outcomes. This approach allows shipping businesses to optimize their entire fleet based on accurate predictions, even if noon reports are the only available data source. In the end, however, focusing on model quality alone is not enough. Data ingestion, data quality assurance, simulation accuracy, optimization quality, third-party data sources, ease of use, and support from Managed Services and/or Client Success are also integral to commercial decarbonization endeavors.

Owners and operators can leverage Nautilus regardless of their available data sources to better pursue their decarbonization and financial goals. With the “Noon + Nautilus data pool” modeling method, optimization can be extended to every vessel in a fleet.

If you would like to understand more about our Optimization-as-a-Service solution, please reach out to us.

1 Anna Phillips, “Ship pollution is rising as the U.S. waits for world leaders to act,” The Washington Post, June 6, 2022, www.washingtonpost.com/climate-environment/2022/06/06/shipping-carbon-emissions-biden-climate/.

2 “Initial IMO GHG Strategy,” International Maritime Organization, accessed August 18, 2022, www.imo.org/en/MediaCentre/HotTopics/Pages/Reducing-greenhouse-gas-emissions-from-ships.aspx.

3 Antonios Tzamaloukas, Stefanos Glaros, and Kerasia Bikli, “Different Approaches in Vessel Performance Monitoring, Balancing Accuracy, Effectiveness & Investment Aspects, from the Operator’s Point of View,” HullPIC ’18, 151, http://data.hullpic.info/hullpic2018_redworth.pdf.

4 Root Mean Square Error (RMSE) is the standard deviation of the residuals (prediction errors).

5 R-squared (R²) is a statistical measure that represents the proportion of the variance for a dependent variable that’s explained by an independent variable or variables in a regression model.